Moving your data shouldn't feel like a high-stakes gamble with your company’s future. In 2026, the best data migration companies have moved beyond simple "extract-and-load" tools, offering sophisticated platforms that treat data as a living, breathing part of your business. Whether you're modernizing a legacy system or shifting to a multi-cloud environment, the goal is the same: zero data loss, minimal downtime, and total peace of mind.

This guide highlights the industry leaders who aren't just moving files, but are building the automated bridges that keep your business running smoothly during the transition.

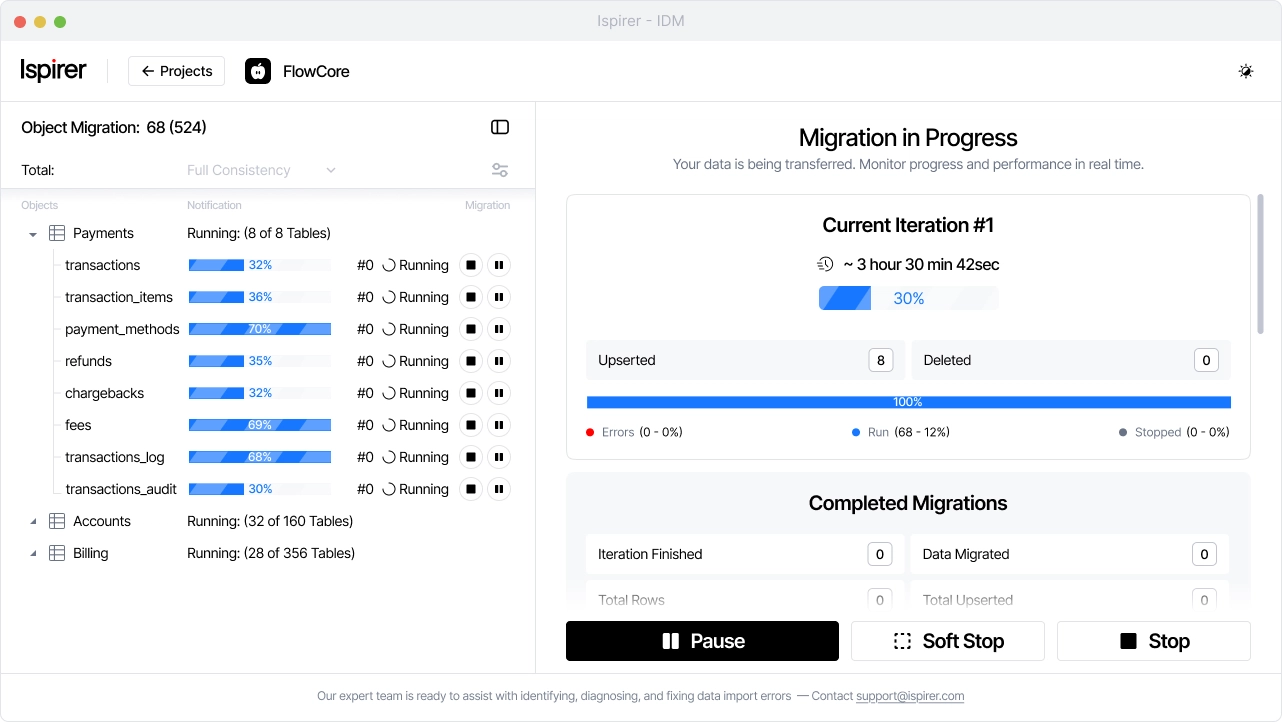

1. Ispirer

Best for: Enterprise-grade data migration with near-zero downtime and no middleware.

Ispirer Data Migrator is a professional solution for data migration, designed for companies that need to move large volumes of information quickly, securely, and without disrupting business operations. Our focus is not on the database infrastructure itself, but on what matters most – your data, its integrity, and availability during and after migration.

Unlike traditional tools, Ispirer Data Migrator requires no middleware and connects directly to your systems. This ensures faster migrations, reduced risk, and full control over the process.

Ispirer Data Migrator simplifies data migration at every stage – from initial data load to real-time synchronization. Built for enterprise-scale scenarios, it keeps downtime near zero, guards against data loss, and removes the need for manual adjustments.

Key Features

- Zero-downtime migration

Built-in replication and near real-time synchronization allow data to move without stopping business-critical systems.

- No middleware or third-party servers

Direct connection between source and target ensures complete security and control over your data.

- High-speed parallel loading

Multiple parallel streams accelerate data transfer, making migrations up to 5x faster than traditional approaches.

- Support for all data types

Handles everything from standard data to JSON and BLOBs efficiently, without loss or corruption.

- Read-only access to the source

Migration is completely safe for your production system and requires no changes to the source environment.

- Continuous data synchronization

Changes in the source system are automatically replicated to the target until the final cutover.

- Automatic recovery and reliability

Built-in monitoring and auto-recovery prevent data loss in case of unexpected failures.
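To make the parallel-loading idea above concrete, here is a minimal Python sketch. It is not Ispirer's implementation: the dictionaries stand in for real source and target tables, and `chunk_ranges`, `copy_chunk`, and `parallel_load` are hypothetical names. The point is the general technique of splitting a table by key range and copying the chunks over several streams at once.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk_ranges(min_id, max_id, chunk_size):
    """Split the inclusive key range [min_id, max_id] into (start, end) chunks."""
    return [
        (start, min(start + chunk_size - 1, max_id))
        for start in range(min_id, max_id + 1, chunk_size)
    ]

def copy_chunk(source, target, start, end):
    """Copy rows whose key falls in [start, end]; returns the number copied."""
    rows = {k: v for k, v in source.items() if start <= k <= end}
    target.update(rows)  # chunks cover disjoint keys, so writes never collide
    return len(rows)

def parallel_load(source, target, chunk_size=250, streams=4):
    """Copy every row from source to target using `streams` worker threads."""
    if not source:
        return 0
    ranges = chunk_ranges(min(source), max(source), chunk_size)
    with ThreadPoolExecutor(max_workers=streams) as pool:
        counts = pool.map(lambda r: copy_chunk(source, target, *r), ranges)
        return sum(counts)

# Hypothetical stand-ins: plain dicts instead of real databases.
source_table = {i: f"row-{i}" for i in range(1, 1001)}
target_table = {}
copied = parallel_load(source_table, target_table)
```

In a real migration each worker would hold its own database connection, and the achievable speedup depends on network, disk, and target-side write capacity.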

Why Ispirer Data Migrator

We built a solution that solves real business problems: reducing migration time, minimizing risk, and delivering predictable results. Trusted across critical industries – from payment processing and healthcare to logistics and supply chain management – Ispirer ensures fast, secure, and controlled data migrations.

Outcome: Fast, secure, and controlled migration of your data with minimal disruption to your business.

2. Fivetran

Best for: Fully automated ELT pipelines and centralizing SaaS data.

As a reliable data migration vendor, Fivetran is all about making things easy. Their philosophy is built on a "set it and forget it" experience for moving data from hundreds of different apps directly into your warehouse.

The solution: managed integration pipelines

Fivetran is a SaaS-based solution that manages the entire lifecycle of your data movement. It is particularly helpful for marketing and sales teams because it offers over 700 pre-built connectors for tools like Salesforce, Zendesk, and ERP systems. For developers, the biggest win is that Fivetran handles all API changes and schema updates automatically. If a source changes, Fivetran fixes the pipeline before you even notice.

- Idempotent data syncs

Ensures that your data stays consistent even if a sync is interrupted, preventing duplicates.

- Ready-for-AI tables

Automatically formats data into clean, GenAI-ready tables for immediate use in machine learning models.

- End-to-end governance

Includes built-in column masking and hashing to keep your data compliant with global privacy laws.
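The idempotent-sync idea is easiest to see in code. The sketch below is not Fivetran's implementation; it uses Python's built-in `sqlite3` module as a stand-in warehouse, and the `contacts` table and `sync_batch` function are hypothetical. It assumes SQLite 3.24 or newer for the `ON CONFLICT` clause. Because each row is written as an upsert keyed on its primary key, replaying the same batch after an interruption leaves the table unchanged instead of creating duplicates.

```python
import sqlite3

def sync_batch(conn, rows):
    """Upsert (id, email) rows keyed on id, so replaying a batch is harmless."""
    conn.executemany(
        "INSERT INTO contacts (id, email) VALUES (?, ?) "
        "ON CONFLICT(id) DO UPDATE SET email = excluded.email",
        rows,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
conn.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, email TEXT)")

batch = [(1, "ana@example.com"), (2, "bo@example.com")]
sync_batch(conn, batch)
sync_batch(conn, batch)  # simulate a retry after an interrupted sync

row_count = conn.execute("SELECT COUNT(*) FROM contacts").fetchone()[0]
```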

3. Talend (Qlik)

Best for: Data quality, scrubbing, and enterprise-scale governance.

Talend (now a core part of Qlik) is often ranked among the top-rated companies for data migration because they focus on "Data Health." Moving messy data into a new system just gives you a faster version of a mess. Talend makes sure your data is scrubbed, standardized, and verified before it ever reaches its destination.

The solution: Qlik Talend Cloud

Their solution offers a unified "Data Fabric" that combines integration with data integrity. It features a visual designer that allows you to map out complex transformations, ensuring that data from disparate sources is unified into a single, clean format. It’s perfect for companies that need to merge multiple legacy databases while maintaining strict quality standards.

- Data stewardship

A unique interface where your team can manually resolve data conflicts that the AI can't quite figure out.

- Automated trust scores

Provides an instant health check on your data, so you know exactly how reliable your information is.

- Pattern matching

Uses advanced logic to find and fix formatting errors (like inconsistent phone numbers or addresses) in real time.
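As a rough illustration of the pattern-matching idea (not Talend's actual rules engine), the sketch below normalizes inconsistently formatted phone numbers with a regular expression. It assumes 10-digit US numbers, and `normalize_us_phone` is a hypothetical helper; anything that does not fit is returned as `None` so it can be reviewed by a person.

```python
import re

def normalize_us_phone(raw):
    """Return a 10-digit US number as XXX-XXX-XXXX, or None if it does not fit."""
    digits = re.sub(r"\D", "", raw)  # strip everything except digits
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the leading country code
    if len(digits) != 10:
        return None
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"

samples = ["(555) 123-4567", "555.123.4567", "+1 555 123 4567", "12345"]
cleaned = [normalize_us_phone(s) for s in samples]
```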

4. Informatica

Best for: Massive enterprise consolidations and global data management.

Informatica is the data migration services company that the world's largest organizations trust with their most sensitive, large-scale projects. When you're dealing with petabytes of data across global regions, you need the heavy-duty infrastructure that Informatica provides.

The solution: Intelligent Data Management Cloud (IDMC)

IDMC is an AI-powered platform that manages the entire data lifecycle. Their AI engine, CLAIRE, helps you discover where your most important data lives and maps out how it should move. It's an all-in-one solution that covers ingestion, quality, and security, making it a favorite for highly regulated industries like banking and healthcare.

- CLAIRE GPT

A natural-language AI that lets you talk to your data – you can literally ask it to design a migration pipeline for you.

- Enterprise-grade masking

Built-in tools that automatically anonymize PII (Personally Identifiable Information) during the move to ensure you stay compliant with GDPR and HIPAA.

- Master data management

Creates a "single source of truth" by merging overlapping data from dozens of siloed systems.
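For a sense of what masking during the move can look like, here is a small sketch. It is not Informatica's masking engine: the key, the column names, and the `mask_value` and `mask_row` helpers are all assumptions made for illustration. It replaces PII values with a keyed hash (HMAC-SHA256), which is deterministic, so the same input always yields the same token and masked columns can still be joined. Strictly speaking this is pseudonymization; whether it meets a particular regulation depends on how the key is managed.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; keep real keys in a vault
PII_COLUMNS = {"email", "ssn"}                 # hypothetical column names

def mask_value(value):
    """Return a deterministic keyed hash of a string value."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_row(row):
    """Mask only the PII columns of a row, leaving other fields untouched."""
    return {
        col: mask_value(val) if col in PII_COLUMNS and val is not None else val
        for col, val in row.items()
    }

row = {"id": 7, "email": "ana@example.com", "ssn": "123-45-6789", "plan": "pro"}
masked = mask_row(row)
```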

5. Airbyte

Best for: Open-source fans and teams building custom connectors.

If your team values flexibility and wants to avoid vendor lock-in, Airbyte is a natural choice. They are the open-source disruptors who have built a massive community around making data movement as transparent as possible.

The solution: Open source ingestion

Airbyte allows you to self-host your migration infrastructure, giving you total control over where your data goes. If they don't have a connector for a niche tool you use, their Connector Development Kit (CDK) makes it easy for your developers to build one in minutes. It’s a "Lego-style" approach to data migration that scales with your creativity.

- 600+ connectors

One of the largest libraries in the world, mostly maintained by a passionate community.

- No-code connector builder

An AI-assisted tool that reads API documentation and fills in the blanks for you.

- Zero-copy loads

Highly optimized pathways that reduce the cost and time of moving data into platforms like Snowflake.

6. Qlik Replicate

Best for: Real-time data streaming and zero-impact replication.

Qlik Replicate is one of the most respected data migration service providers for teams that can't afford a single second of downtime. It specializes in moving data while your systems are still running, making the "big switch" almost invisible to your users.

The solution: Log-based CDC

The magic of Qlik Replicate is its Change Data Capture (CDC) technology. Instead of asking your database for data (which can slow it down), it silently reads the transaction logs. This agentless approach means there is no software to install on your production servers, and your data stays perfectly in sync across different environments in real time.

- Zero-impact migration

Move massive datasets without affecting the performance of your live applications.

- Bi-directional sync

Keeps your old and new systems in harmony, allowing for easy testing and a safe fallback if needed.

- Universal compatibility

Connects everything from ancient mainframes to the latest cloud-native databases.
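Conceptually, log-based CDC boils down to replaying an ordered stream of change events against the target. The sketch below shows only that replay step. It does not read a real transaction log and is not Qlik Replicate's API; the event format and `apply_change` function are hypothetical.

```python
def apply_change(replica, event):
    """Apply one change event to the replica, keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        replica[key] = event["row"]
    elif op == "delete":
        replica.pop(key, None)  # tolerate a replayed delete
    else:
        raise ValueError(f"unknown operation: {op}")

# Hypothetical, already-decoded log entries in commit order.
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Ana", "tier": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Bo", "tier": "free"}},
    {"op": "update", "key": 1, "row": {"name": "Ana", "tier": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for event in change_log:
    apply_change(replica, event)
```

Because the source database is never queried for the rows themselves, the load on production stays low; the hard part in practice is decoding each vendor's log format, which this sketch skips.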

7. Hevo Data

Best for: Agile teams who want a simple, fault-tolerant setup.

Hevo is a top pick among data migration companies for teams that need to get up and running right away. They’ve removed the complexity from the ETL process, focusing on a user-friendly experience that doesn't sacrifice power.

The solution: Fault-tolerant pipelines

Hevo is built to be "unbreakable." If the system encounters a row of data that doesn't fit the target schema, it doesn't stop the whole migration. It simply moves that record to a "waiting room" for you to fix later, while the rest of the data keeps flowing. This prevents small errors from turning into major project delays.

- Reverse ETL

Moves data into your warehouse and can also push insights back into business tools like HubSpot.

- Auto-schema management

Automatically detects when you add new columns or tables at the source and updates the target for you.

- Real-time dashboarding

Lets you watch your data move in real time with beautiful, easy-to-read logs.
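The "waiting room" behavior described above is a common pattern, often called a dead-letter or quarantine queue. Here is a minimal sketch of the idea, not Hevo's implementation; `TARGET_SCHEMA`, `fits_schema`, and `load_with_quarantine` are hypothetical names. Rows that match the target schema are loaded, and the rest are set aside so one bad record never stops the run.

```python
TARGET_SCHEMA = {"id": int, "amount": float}  # hypothetical target columns

def fits_schema(row):
    """True when the row has exactly the target columns with the expected types."""
    return row.keys() == TARGET_SCHEMA.keys() and all(
        isinstance(row[col], typ) for col, typ in TARGET_SCHEMA.items()
    )

def load_with_quarantine(rows):
    """Load rows that fit; set the rest aside instead of aborting the run."""
    loaded, quarantined = [], []
    for row in rows:
        (loaded if fits_schema(row) else quarantined).append(row)
    return loaded, quarantined

incoming = [
    {"id": 1, "amount": 9.5},
    {"id": 2, "amount": "n/a"},  # wrong type, goes to the waiting room
    {"id": 3, "amount": 12.0},
]
loaded, quarantined = load_with_quarantine(incoming)
```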

8. Matillion

Best for: Cloud-native transformation and Snowflake/Databricks users.

Matillion functions like a data migration consulting company in software form. They are laser-focused on the cloud, helping you use the raw power of your destination (like Snowflake or BigQuery) to handle the heavy lifting of data transformation.

The solution: Data productivity cloud

Matillion uses a "push-down" architecture. Instead of moving data to a separate server to transform it, it pushes the logic directly into your cloud warehouse. This is much faster and cheaper for large datasets. It also treats data pipelines like code, offering full Git integration for developers who want to version-control their migrations.

- Agentic data engineering

Uses AI agents to automate the boring parts of pipeline management, like error handling and documentation.

- Semantic layer

Ensures that your data definitions stay the same across every tool in your company.

- No-code/High-code hybrid

Offers a visual designer for speed, but lets you drop into Python or SQL for custom logic.
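The push-down approach is easy to demonstrate on a small scale. In the sketch below, an in-memory SQLite database stands in for a cloud warehouse, and the table names are hypothetical; this is not Matillion's generated code. The transformation is written as SQL and executed by the database engine itself, so no rows are pulled out to a separate server to be processed.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
warehouse.execute("CREATE TABLE raw_orders (region TEXT, amount REAL)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?)",
    [("eu", 10.0), ("eu", 5.0), ("us", 7.5)],
)

# The aggregation runs inside the warehouse engine; Python only sends the SQL.
warehouse.execute(
    "CREATE TABLE revenue_by_region AS "
    "SELECT region, SUM(amount) AS revenue FROM raw_orders GROUP BY region"
)

result = warehouse.execute(
    "SELECT region, revenue FROM revenue_by_region ORDER BY region"
).fetchall()
```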

9. Stitch

Best for: Fast and lightweight SaaS data migration for analytics professionals.

Stitch is designed for data analysts and engineers who need to centralize operational data from multiple SaaS applications into modern data warehouses quickly and reliably. It’s ideal for projects that require rapid, repeatable migrations without complex setup, helping analysts consolidate marketing, sales, or financial data for BI and reporting.

The solution: Simple ELT pipelines

Stitch provides a straightforward ELT framework that focuses on extracting data from diverse SaaS sources and loading it into your warehouse with minimal configuration. Engineers can quickly set up pipelines, while analysts immediately gain access to consolidated, ready-to-analyze data. Its lightweight architecture reduces operational overhead and accelerates migration projects.

- Singer-based connectors

Stitch uses the open-source Singer standard, which allows analysts and engineers to connect even niche SaaS tools or custom APIs without building and maintaining bespoke migration logic.

- Incremental data loading

The platform migrates only new or changed records, which significantly reduces migration time and bandwidth consumption when working with large operational datasets.

- Error handling and monitoring

Stitch automatically retries failed loads and provides transparent logging, allowing analysts to quickly identify and resolve data gaps during migration.

- Scalable and cost-effective

Usage-based pricing makes Stitch suitable for growing migration projects, where data volume increases gradually without forcing upfront infrastructure investments.
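Incremental loading usually relies on a bookmark: the pipeline remembers the latest modification time it has seen and asks only for rows newer than that on the next run. The sketch below illustrates the idea in plain Python. It is not Stitch's or Singer's code, and `extract_incremental` and the `updated_at` field are assumptions made for the example.

```python
def extract_incremental(source_rows, bookmark):
    """Return rows modified after `bookmark`, plus the new bookmark value."""
    changed = [r for r in source_rows if r["updated_at"] > bookmark]
    new_bookmark = max((r["updated_at"] for r in changed), default=bookmark)
    return changed, new_bookmark

# Hypothetical rows; ISO-8601 strings in one format compare chronologically.
source_rows = [
    {"id": 1, "updated_at": "2026-01-01T10:00:00Z"},
    {"id": 2, "updated_at": "2026-01-02T09:30:00Z"},
    {"id": 3, "updated_at": "2026-01-03T08:15:00Z"},
]

first_run, bookmark = extract_incremental(source_rows, "")  # empty bookmark: full load
source_rows[0]["updated_at"] = "2026-01-04T12:00:00Z"       # row 1 changes later
second_run, bookmark = extract_incremental(source_rows, bookmark)
```

The first run moves all three rows; the second moves only the row that changed, which is where the time and bandwidth savings come from.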

10. Google Cloud Data Fusion

Best for: Cloud-native migrations in Google Cloud for engineers and analysts.

Google Cloud Data Fusion is a managed service that allows engineers to design, monitor, and orchestrate data migration workflows across hybrid and cloud environments. Analysts benefit from consistent, validated datasets that are ready for analytics or BI immediately after migration. Its visual interface and integration with BigQuery make large-scale cloud migrations smooth and reliable.

The solution: Managed data migration pipelines

Data Fusion offers drag-and-drop pipeline orchestration for cloud-native and hybrid migration projects. Engineers can build complex workflows quickly, and analysts receive harmonized datasets without waiting for extensive ETL coding. The platform automatically manages data quality, schema evolution, and optimization for the cloud warehouse.

- Visual pipeline design

Engineers can design migration workflows visually, reducing configuration errors and accelerating the setup of complex cloud and hybrid data migrations.

- Hybrid connectivity

Data Fusion securely migrates data from on-prem systems or other cloud providers into Google Cloud, enabling gradual and low-risk modernization projects.

- Native BigQuery optimization

The platform automatically optimizes pipelines for BigQuery, ensuring migrated data is loaded efficiently and remains cost-effective at scale.

- Real-time and batch migration

Both streaming and batch workloads are supported, allowing analysts to access fresh data even while historical datasets are still being migrated.

11. StreamSets

Best for: Data migration in evolving environments with frequent schema changes.

StreamSets specializes in moving data from dynamic sources that often change their schema. Engineers benefit from pipelines that automatically adapt to these changes, while analysts can trust that the migrated data is consistent and validated. This makes it ideal for SaaS-heavy environments or operational systems undergoing rapid change.

The solution: Schema-aware data pipelines

StreamSets continuously monitors source systems and automatically adapts pipelines to schema changes. This ensures uninterrupted migration even in fast-evolving data environments. Engineers can deploy pipelines across cloud and on-prem systems, and analysts get reliable, ready-to-use datasets.

- Schema drift detection

StreamSets continuously detects schema changes in source systems and adapts pipelines automatically, preventing broken migrations in fast-changing environments.

- Built-in validation

Data is validated during migration to ensure completeness and correctness, which reduces the risk of downstream reporting errors for analysts.

- Hybrid deployment

Engineers can run StreamSets pipelines across cloud and on-prem environments, making it suitable for phased or transitional migration strategies.

- Real-time and batch support

The platform supports both continuous streaming and bulk migrations, allowing teams to combine historical backfills with live operational data movement.
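Schema drift detection can be sketched in a few lines. The example below is not StreamSets code, and `detect_drift` and `load_with_drift_handling` are hypothetical helpers. It compares each incoming record against the columns the pipeline already knows, widens the schema when a new column appears, and fills the gaps in older rows with `None` so the load continues instead of failing.

```python
def detect_drift(known_columns, record):
    """Return columns present in the record but missing from the known schema."""
    return sorted(set(record) - set(known_columns))

def load_with_drift_handling(records, known_columns):
    """Widen the schema as new columns appear, then give every row the same shape."""
    columns = list(known_columns)
    for record in records:
        columns.extend(detect_drift(columns, record))
    # Rows that predate a new column get None for it.
    rows = [{col: record.get(col) for col in columns} for record in records]
    return columns, rows

records = [
    {"id": 1, "name": "Ana"},
    {"id": 2, "name": "Bo", "country": "SE"},  # source added a column
]
columns, rows = load_with_drift_handling(records, ["id", "name"])
```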

12. Rivery

Best for: Managed data migration with orchestration and transformation for engineers and analysts.

Rivery combines ETL/ELT, pipeline orchestration, and transformation in one platform. Engineers can automate repetitive tasks and schedule migrations reliably, while analysts receive clean, transformed datasets immediately. It’s ideal for cloud-first organizations and analytics-driven migrations.

The solution: End-to-end data migration platform

Rivery provides a fully managed environment for orchestrating complex migrations. Engineers configure pipelines once, and the platform handles scheduling, error recovery, and incremental data loading. Analysts benefit from immediate access to standardized data for reporting or machine learning.

- Automated orchestration

Rivery schedules and orchestrates migration pipelines automatically, removing the need for manual execution and reducing operational overhead for engineers.

- Incremental migration support

Only new or modified data is migrated, which minimizes load on source systems and shortens migration windows.

- Built-in transformations

Data can be cleansed, enriched, and standardized during migration, so analysts receive analysis-ready datasets immediately.

- Audit and lineage tracking

Full visibility into data origin, movement, and transformation helps analysts trust the migrated data and supports governance requirements.
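At its core, orchestration means running steps in an order that respects their dependencies. The sketch below shows that core with Python's standard `graphlib` module (Python 3.9+). It is not Rivery's scheduler, and the step names and `run_pipeline` function are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline steps, each mapped to the steps it depends on.
dependencies = {
    "extract_customers": set(),
    "extract_orders": set(),
    "transform_orders": {"extract_customers", "extract_orders"},
    "load_warehouse": {"transform_orders"},
}

def run_pipeline(deps, run_step):
    """Run every step after its dependencies; returns the execution order."""
    order = list(TopologicalSorter(deps).static_order())
    for step in order:
        run_step(step)
    return order

executed = []
order = run_pipeline(dependencies, executed.append)
```

A managed platform adds scheduling, retries, and alerting on top, but the dependency ordering is the piece that keeps a load from running before its inputs exist.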

13. Keboola

Best for: Governed and repeatable migrations for analytics-driven organizations.

Keboola provides a modular platform for orchestrating migrations across multiple sources and systems. Engineers can version and reuse pipelines, while analysts receive consistent and auditable datasets. It emphasizes governance, compliance, and reproducibility, making it ideal for regulated industries.

The solution: Modular data migration platform

Keboola uses modular components to migrate and transform data reliably. Engineers can combine prebuilt connectors with custom transformations, ensuring that pipelines can be rerun or rolled back. Analysts benefit from complete lineage tracking and compliance-ready datasets for analytics.

- End-to-end lineage tracking

Keboola provides complete visibility into how data moves and changes during migration, allowing analysts to verify accuracy and consistency.

- Version-controlled pipelines

Migration workflows are versioned and reproducible, enabling engineers to safely rerun or roll back migrations when needed.

- Compliance-ready

Built-in governance features support regulatory requirements such as GDPR and SOC 2, making migrations suitable for regulated industries.

- Multi-system scalability

The platform orchestrates migrations across many systems simultaneously, helping organizations consolidate data from diverse sources.

14. Denodo

Best for: Complex enterprise migrations across heterogeneous systems.

Denodo provides a logical data layer to unify and migrate data from disparate systems. Engineers can perform migrations with minimal load on production systems, while analysts receive consistent, harmonized datasets for reporting or modeling. It is a strong fit for multi-cloud or hybrid environments.

The solution: Logical data migration platform

Denodo creates a virtualized layer to move and harmonize data without heavy extraction. Engineers maintain control over pipelines, and analysts can immediately work with unified datasets. Security and compliance are built-in, making it suitable for sensitive enterprise data.

- Unified data modeling

Denodo standardizes data from heterogeneous systems into a consistent logical model that analysts can use immediately after migration.

- Minimal source impact

Virtualized data access reduces the load on production systems, allowing migrations to run without disrupting business operations.

- Enterprise-grade security

Encrypted data movement and fine-grained access controls protect sensitive information throughout the migration process.

- Flexible deployment

Denodo supports cloud, on-prem, and hybrid environments, making it suitable for complex enterprise migration architectures.

15. HVR (by Fivetran)

Best for: High-volume migrations requiring continuous synchronization.

HVR is designed for engineers migrating large datasets in real time. Analysts benefit from fully synchronized, accurate data throughout the migration, making it ideal for modernization, multi-region, and cloud-to-cloud projects.

The solution: Log-based data migration

HVR leverages real-time Change Data Capture (CDC) to ensure low-latency, high-throughput migrations. Engineers can perform zero-downtime cutovers, while analysts get up-to-date, consistent datasets immediately.

- High-throughput migration

HVR efficiently migrates massive datasets with low latency, even in high-volume enterprise environments.

- Data validation

Built-in checks ensure that migrated data remains complete and consistent, which is critical for analyst trust.

- Zero-downtime cutovers

Source and target systems remain synchronized until final switchover, enabling seamless migrations without service interruption.

- Cross-platform support

HVR works across multiple databases, platforms, and clouds, supporting complex modernization and multi-region migrations.
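A common way to validate a migration is to compare row counts and a checksum of the contents on both sides. The sketch below shows that general technique. It is not HVR's compare feature, and `table_fingerprint` and `tables_match` are hypothetical helpers operating on in-memory rows.

```python
import hashlib

def table_fingerprint(rows):
    """Return (row_count, digest) for a table, independent of row order."""
    digest = hashlib.sha256()
    # Sort columns within each row, then sort rows, so ordering never matters.
    for line in sorted(repr(sorted(row.items())) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return len(rows), digest.hexdigest()

def tables_match(source_rows, target_rows):
    """True when both sides hold the same rows with the same values."""
    return table_fingerprint(source_rows) == table_fingerprint(target_rows)

source = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
target_ok = [{"id": 2, "v": "b"}, {"id": 1, "v": "a"}]   # same data, different order
target_bad = [{"id": 1, "v": "a"}, {"id": 2, "v": "B"}]  # one value differs
```

On large tables the fingerprint is usually computed per key range inside each database, so that only the digests cross the network.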

16. Solix Technologies

Best for: Enterprise-scale migrations with governance for engineers and analysts.

Solix provides automated migration workflows for large datasets, allowing engineers to focus on orchestration while analysts receive clean, standardized data. Compliance and governance are built into every migration.

The solution: Automated enterprise data migration

Solix handles extraction, validation, and loading automatically, reducing downtime and human error. Analysts immediately access accurate datasets for reporting or analytics, while engineers benefit from built-in lineage and audit capabilities.

- Full migration automation

Solix automates extraction, validation, and loading, reducing human error and accelerating large-scale migration projects.

- Minimal downtime strategies

Migration workflows are designed to minimize business disruption, even when transferring very large datasets.

- Governance and compliance

Detailed lineage tracking and audit logs support regulatory compliance and internal governance requirements.

- Scalable for diverse sources

The platform handles structured and semi-structured data from multiple systems within a single migration framework.

17. K2view Data Product Platform

Best for: Entity-based migrations for engineers and analysts.

K2view focuses on migrating business entities rather than raw tables, preserving operational continuity. Engineers can manage phased migrations, and analysts get immediate access to unified datasets.

The solution: Entity-based data migration

K2view aggregates related records into business entities, allowing controlled, phased migration. Engineers monitor and adjust pipelines in real time, and analysts receive ready-to-use data for analytics or operational workflows.

- Business entity modeling

K2view migrates logically grouped business entities, preserving relationships between records during migration.

- Phased and on-demand strategies

Engineers can migrate data incrementally or all at once, depending on operational and business requirements.

- In-flight transformation

Data is cleansed and enriched during migration, so analysts receive usable datasets immediately.

- Observability and control

Real-time monitoring allows engineers to track progress and intervene if needed.
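To illustrate what migrating by business entity means in practice, the sketch below groups rows from three hypothetical tables into one entity per customer and then moves a chosen subset of customers as a phase. It is a simplified picture of the idea rather than K2view's data model; `build_entities` and `migrate_phase` are assumed names.

```python
def build_entities(customers, orders, tickets):
    """Group rows from separate tables into one entity per customer id."""
    entities = {
        c["id"]: {"customer": c, "orders": [], "tickets": []} for c in customers
    }
    for order in orders:
        entities[order["customer_id"]]["orders"].append(order)
    for ticket in tickets:
        entities[ticket["customer_id"]]["tickets"].append(ticket)
    return entities

def migrate_phase(entities, customer_ids, target):
    """Move whole entities for the chosen customers; related rows travel together."""
    for cid in customer_ids:
        target[cid] = entities[cid]

customers = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "Bo"}]
orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 2}]
tickets = [{"ticket_id": 100, "customer_id": 1}]

entities = build_entities(customers, orders, tickets)
target = {}
migrate_phase(entities, [1], target)  # phase one: customer 1 only
```

Because a customer's orders and tickets move together with the customer record, the target never holds a half-migrated customer between phases.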

18. Boomi

Best for: Hybrid cloud migrations for engineers and integration specialists.

Boomi (formerly Dell Boomi) combines extraction, transformation, and orchestration in a single platform. Engineers can create and monitor pipelines reliably, while analysts immediately get consistent, ready-to-use datasets.

The solution: Managed data migration pipelines

Boomi provides low-code, drag-and-drop pipelines for hybrid and cloud environments. Engineers can integrate custom transformations, and analysts can use harmonized data instantly for analytics or BI.

- Pre-built connectors

Boomi includes connectors for SaaS apps, databases, and APIs, reducing the effort required to start migrations.

- Low-code workflows

Visual pipeline design allows engineers to build and modify migration workflows quickly.

- Transformation support

Data can be standardized and enriched during migration, making it suitable for analytics immediately.

- Orchestration and monitoring

Centralized scheduling and monitoring ensure migrations run reliably and transparently.

19. Apache NiFi

Best for: Flexible, open-source migrations for engineers and developers.

NiFi automates complex workflows for moving, routing, and transforming data. Engineers can design highly customized pipelines, while analysts get reliable, consistent datasets for batch or streaming workloads.

The solution: Flow-based data migration engine

NiFi uses a visual interface to orchestrate flows from multiple sources to multiple targets. Engineers gain full control over routing, transformations, and monitoring, and analysts receive validated, consistent data for downstream use.

- Visual flow design

Engineers can visually design and monitor complex migration workflows, making adjustments in real time.

- Multi-protocol support

NiFi supports a wide range of protocols and systems, enabling migrations from highly heterogeneous sources.

- Streaming and batch support

Both real-time data flows and bulk historical migrations are handled within the same framework.

- Provenance tracking

Detailed audit trails allow organizations to trace every piece of data through the migration process.
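Provenance tracking amounts to keeping an append-only event log for every record as it passes through the flow. The sketch below shows the concept in plain Python. It does not use NiFi's provenance repository or API; `ProvenanceLog` and the three-step `migrate` flow are hypothetical.

```python
from datetime import datetime, timezone

class ProvenanceLog:
    """Append-only record of what happened to each piece of data."""

    def __init__(self):
        self.events = []

    def record(self, record_id, step):
        self.events.append({
            "record_id": record_id,
            "step": step,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def history(self, record_id):
        """Return the ordered list of steps a record passed through."""
        return [e["step"] for e in self.events if e["record_id"] == record_id]

def migrate(records, log):
    """Hypothetical flow: receive, transform, deliver (or drop)."""
    delivered = []
    for rec in records:
        log.record(rec["id"], "received")
        if not rec.get("email"):
            log.record(rec["id"], "dropped: missing email")
            continue
        rec = {**rec, "email": rec["email"].lower()}
        log.record(rec["id"], "transformed")
        delivered.append(rec)
        log.record(rec["id"], "delivered")
    return delivered

log = ProvenanceLog()
delivered = migrate(
    [{"id": "a1", "email": "Ana@Example.com"}, {"id": "b2", "email": ""}], log
)
```

Calling `log.history("b2")` afterwards shows exactly where that record left the flow and why.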

20. Striim

Best for: Real-time data migration and continuous synchronization across hybrid and multi-cloud environments.

Striim is an enterprise-grade data migration platform focused on moving and synchronizing operational data in real time. It is designed for scenarios where data must be migrated continuously with minimal latency and no downtime, such as cloud modernization, analytics enablement, or cross-platform replication. Striim supports both initial bulk data loads and ongoing change capture, allowing organizations to migrate data while systems remain fully operational.

The solution: Real-time streaming data pipelines

Striim uses streaming and log-based Change Data Capture (CDC) to migrate data incrementally as changes occur. This approach enables safe, zero-interruption migrations by keeping source and target systems synchronized throughout the transition, rather than relying on disruptive batch transfers.

- Log-based CDC

Captures inserts, updates, and deletes directly from transaction logs, minimizing load on source systems during migration.

- Initial load plus continuous sync

Supports full historical data migration followed by real-time replication until final cutover.

- In-flight transformations

Allows filtering, enrichment, and restructuring of data while it is being migrated.

- Hybrid and multi-cloud support

Moves data between on-premises systems and cloud platforms such as Snowflake, BigQuery, Databricks, AWS, and Azure.

- Low-latency, high-throughput pipelines

Designed for large-scale enterprise data volumes with near real-time delivery.
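The in-flight transformations listed above (filtering, enrichment, restructuring) can be pictured as a chain of small steps that each event passes through on its way to the target. The sketch below uses Python generators to show that shape. It is not Striim's query language or API, and the event fields, exchange rates, and function names are assumptions made for illustration.

```python
def only_completed(events):
    """Filter: drop events that are not in the 'completed' state."""
    for event in events:
        if event["status"] == "completed":
            yield event

def add_amount_usd(events, rates):
    """Enrich: attach an amount converted to USD using a lookup table."""
    for event in events:
        usd = round(event["amount"] * rates[event["currency"]], 2)
        yield {**event, "amount_usd": usd}

def reshape(events):
    """Restructure: keep only the fields the target table expects."""
    for event in events:
        yield {"order_id": event["id"], "amount_usd": event["amount_usd"]}

RATES = {"USD": 1.0, "EUR": 1.1}  # hypothetical static rates

stream = [
    {"id": 1, "status": "completed", "amount": 10.0, "currency": "EUR"},
    {"id": 2, "status": "pending", "amount": 99.0, "currency": "USD"},
    {"id": 3, "status": "completed", "amount": 20.0, "currency": "USD"},
]

migrated = list(reshape(add_amount_usd(only_completed(stream), RATES)))
```

Each step handles one event at a time, so nothing has to be staged in a batch before it is transformed.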

Ready to build your migration roadmap?

Choosing between data migration companies is about more than just checking features; it's about finding a partner that understands your specific technical hurdles. In 2026, the best moves are the ones you barely notice – where your data arrives clean, secure, and ready to work immediately.

At Ispirer, we love the "hard" migrations. We help you move beyond the limits of basic sync tools by providing a solution that actually understands the code governing your data. Whether you're dealing with decades of legacy logic or moving to a high-performance cloud stack, our experts are here to ensure your data and your logic arrive exactly where they need to be.
